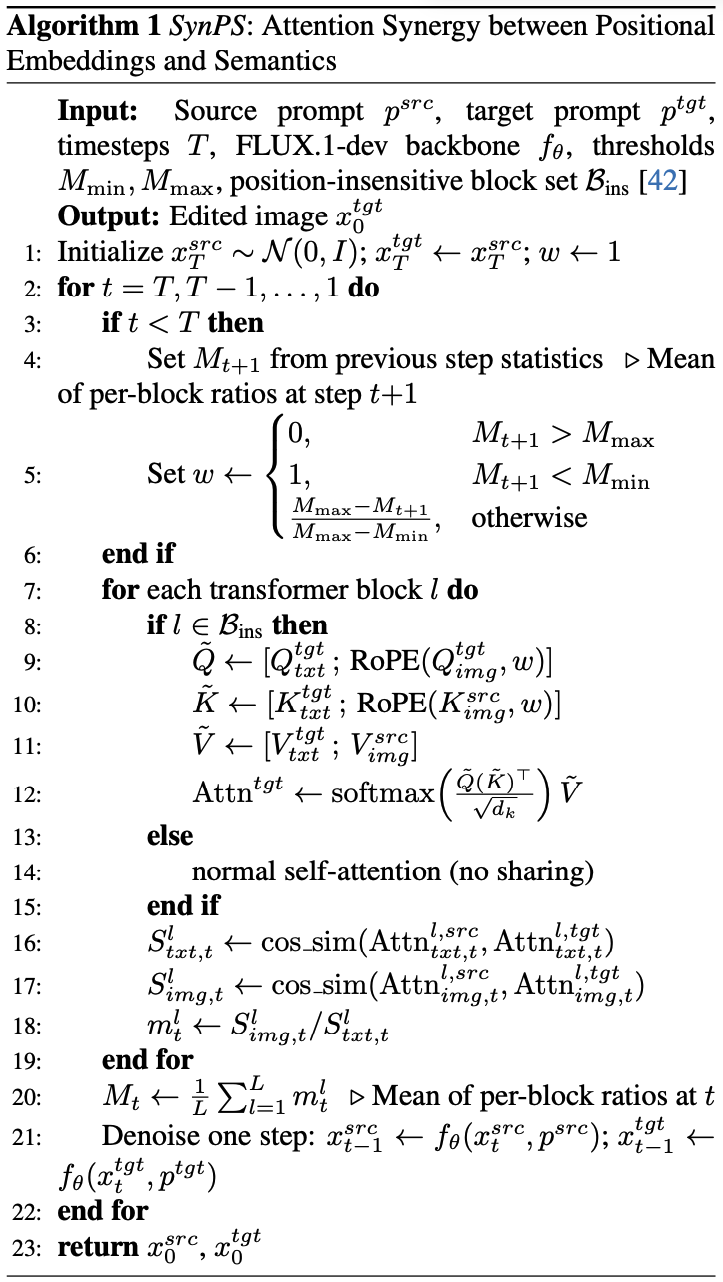

To tackle the challenge of determining when and how to apply positional embeddings effectively, we propose the SynPS method, which modulates Synergy between Positional embedding and Semantics in Attention for complex non-rigid image editing.

To determine when to apply positional embeddings, we introduce a metric to quantify the editing magnitude. We compute the cosine similarity between the source and target attention outputs for both text tokens (Sltxt,t) and image tokens (Slimg,t) at each block l and timestep t. Sltxt,t inversely reflects the desired semantic change, while Slimg,t measures the current visual alignment. We define the overall editing measurement Mt as the average ratio of these similarities across L blocks:

\[

M_t = \frac{1}{L}\sum_{l=1}^{L}\frac{S^{l}_{img,t}}{S^{l}_{txt,t}}.

\]

Intuitively, when this ratio is large, the generated target image might diverge too much from the textual instruction, indicating under-editing, while a small ratio implies over-editing of unfaithful fidelity to the source image.

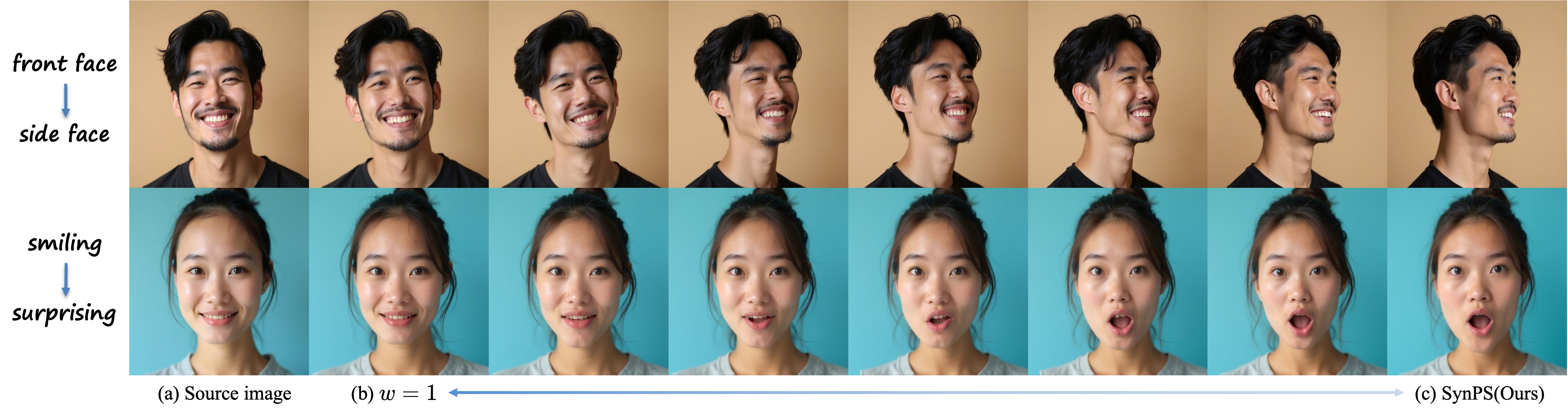

Then, we propose an attention synergy pipeline that dynamically adjusts the effect of position embeddings according to the stepwise editing measurement. We exploit the property of RoPE, which encodes relative displacement between tokens. We introduce a scaling factor w ∈ [0, 1] applied to the position IDs of query and key tokens, effectively scaling the rotation angles and the relative distance. This creates a continuous spectrum of control: w=1 preserves full positional constraints to prevent deformation, while w=0 renders attention position-agnostic to allow semantic changes.

The weight w is adaptively determined by the editing measurement Mt+1 via a piecewise linear function:

\[

w=

\begin{cases}

0, & \text{if } M_{t+1} > M_{\max},\\

1, & \text{if } M_{t+1} < M_{\min},\\

\dfrac{M_{\max}-M_{t+1}}{M_{\max}-M_{\min}}, & \text{otherwise.}

\end{cases}

\]

Specifically, when Mt+1 is high (indicating under-editing), we relax positional constraints (w → 0) to facilitate semantic adherence; conversely, when Mt+1 is low, we enforce structure (w → 1) to avoid over-editing.